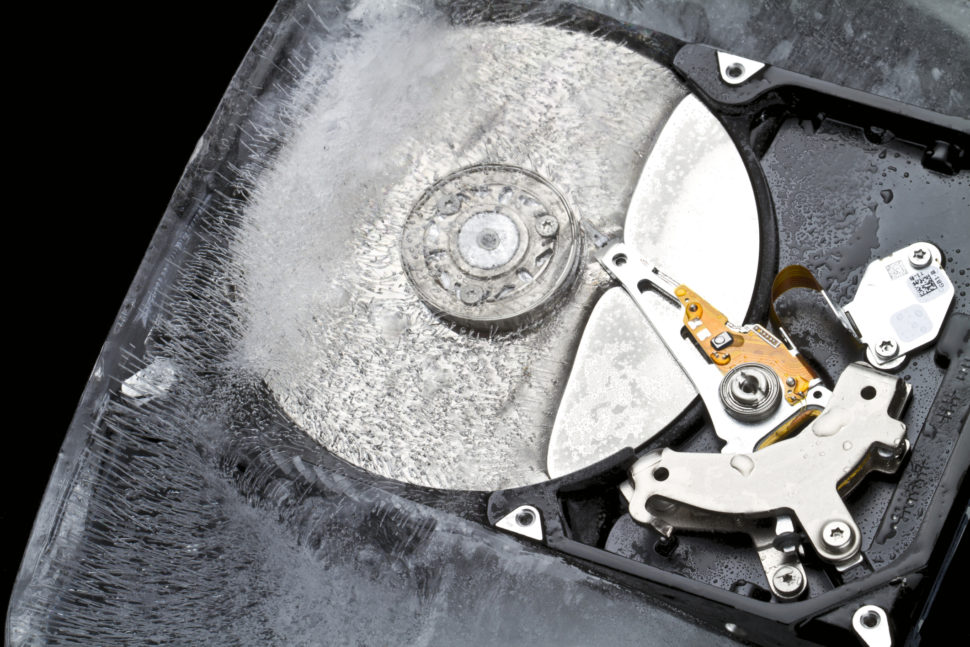

Among a flurry of storage-related announcements at this year’s Google Cloud Next conference is what Google calls “ice cold storage.” This class of archive cloud storage, designed for long-term data retention, uses the same APIs as Google’s other storage classes for access and management. It is aimed at data that is truly dormant, with no expected need for access of any type.

Some early generations of “cold,” “deep cold” or “icy” storage tiers and classes have been plagued by delays while customers wait for data to become available for restore. In addition, retrieval often involves multiple steps driven by policies similar to those of traditional on-premises hierarchical storage management (HSM). Google’s approach, which eliminates the need for a separate retrieval process and provides immediate, low-latency access to content, is an effort to avoid those issues and keep up with industry trends, said Greg Schulz, senior advisory analyst at StorageIO.

This new class of Google storage is worth evaluating, Schulz said, with some caveats.

“It’s important to look beyond the cost per capacity and factor in the retrieval time (how long you wait for your data order to be ready) in addition to data transfer time (how fast you can move data),” he explained. It’s also important to understand the economics: not only the storage cost, but also additional fees for access, including data movement, listing and other metadata operations. Google has announced pricing starting at $1.23 per TB per month.
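To make Schulz’s point concrete, here is a minimal back-of-the-envelope sketch in Python. Only the $1.23 per TB per month starting price comes from the announcement. The function names, the per-TB access fee parameter and the 240 MB/s throughput figure used in the example are illustrative assumptions, not published Google pricing or a measured restore rate.

```python
# Rough archive-storage estimate: capacity cost, access fees and transfer time.
# Only STORAGE_PRICE_PER_TB_MONTH comes from the announcement; everything else
# is a hypothetical placeholder to be replaced with a provider's real figures.

STORAGE_PRICE_PER_TB_MONTH = 1.23  # USD, announced starting price


def monthly_storage_cost(capacity_tb, price_per_tb_month=STORAGE_PRICE_PER_TB_MONTH):
    """Cost of simply keeping capacity_tb terabytes stored for one month."""
    return capacity_tb * price_per_tb_month


def total_cost(capacity_tb, months, retrieved_tb=0.0, access_fee_per_tb=0.0):
    """Storage cost over `months`, plus a one-time fee for data read back.

    access_fee_per_tb is a hypothetical stand-in for retrieval, data movement
    and metadata-operation charges; it defaults to 0 because no such fee is
    quoted in the article.
    """
    storage = monthly_storage_cost(capacity_tb) * months
    access = retrieved_tb * access_fee_per_tb
    return storage + access


def transfer_hours(capacity_tb, throughput_mb_per_s):
    """Hours needed to move capacity_tb at a sustained throughput.

    Uses decimal units: 1 TB = 1,000,000 MB.
    """
    seconds = capacity_tb * 1_000_000 / throughput_mb_per_s
    return seconds / 3600


if __name__ == "__main__":
    # Example: 100 TB kept for a year, 10 TB read back at an assumed $20/TB fee.
    print(f"Monthly storage: ${monthly_storage_cost(100):.2f}")
    print(f"One-year total:  ${total_cost(100, 12, 10, 20.0):.2f}")
    print(f"Hours to move 10 TB at 240 MB/s: {transfer_hours(10, 240):.1f}")
```

Even with a low per-capacity price, the access fee and the hours needed to move the data can dominate the decision once restores become frequent, which is the trade-off Schulz describes.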

In addition to ice cold storage, Google has announced that Cloud Filestore, a managed file storage system, is now available and has increased read performance up to 1.2 GB/s throughput and 60k IOPS. By offering its own NFS file-based cloud storage access, Google joins other cloud providers in making their cloud storage platforms more compatible with customer applications, instead of forcing customers to change or adapt to the cloud.

Google also announced the availability of Regional Persistent Disk, which provides active-active synchronous disk replication across two zones in the same region. By placing the storage geographically closer to where the data is accessed by applications, users are likely to see better reliability as well as performance.

The company has also increased throughput limits per instance for Google Persistent Disk to 240 MB/s for writes and 240 MB/s for reads. In addition, the number of Persistent Disks that can be attached to a VM has increased to 128.